A former attorney at Facebook explains

Imagine crafting an ad only for white people. Maybe you narrow down the targeting to a certain location and slice out certain ages for good measure. That might make sense if you’re trying to sell a cream-colored foundation in your Manhattan boutique, but you’d be ill-advised to try it for advertising housing or jobs — because it’s against the law.

And yet, in 2016, a ProPublica report detailed the ability to use an “ethnic affinity” option in Facebook’s self-service ad tool, allowing businesses to micro-target advertisements based on a variety of immutable demographic categories. A real estate company could use this tool to show ads for available properties only to certain races or genders.

That’s illegal under the 1968 Fair Housing Act, which prohibits any advertisement “with respect to the sale or rental of a dwelling that indicates any preference, limitation, or discrimination based on race, color, religion, sex, handicap, familial status, or national origin.” At the time of ProPublica’s initial investigation, Facebook said it would update its ad-targeting product but hadn’t as of November 2017, when ProPublica published a follow-up article. Then, in 2018, the ACLU filed a complaint with the Equal Employment Opportunity Commission alleging that Facebook allowed gender-based discrimination for businesses advertising employment opportunities.

It finally seemed that the controversies related to ad targeting would come to a close earlier this month, when the social network announced a settlement with various civil rights groups, the state of Washington, and other regulators. Facebook definitively declared it would no longer allow advertisers to target specific users by age, gender, or zip code in ads for housing, jobs, or credit.

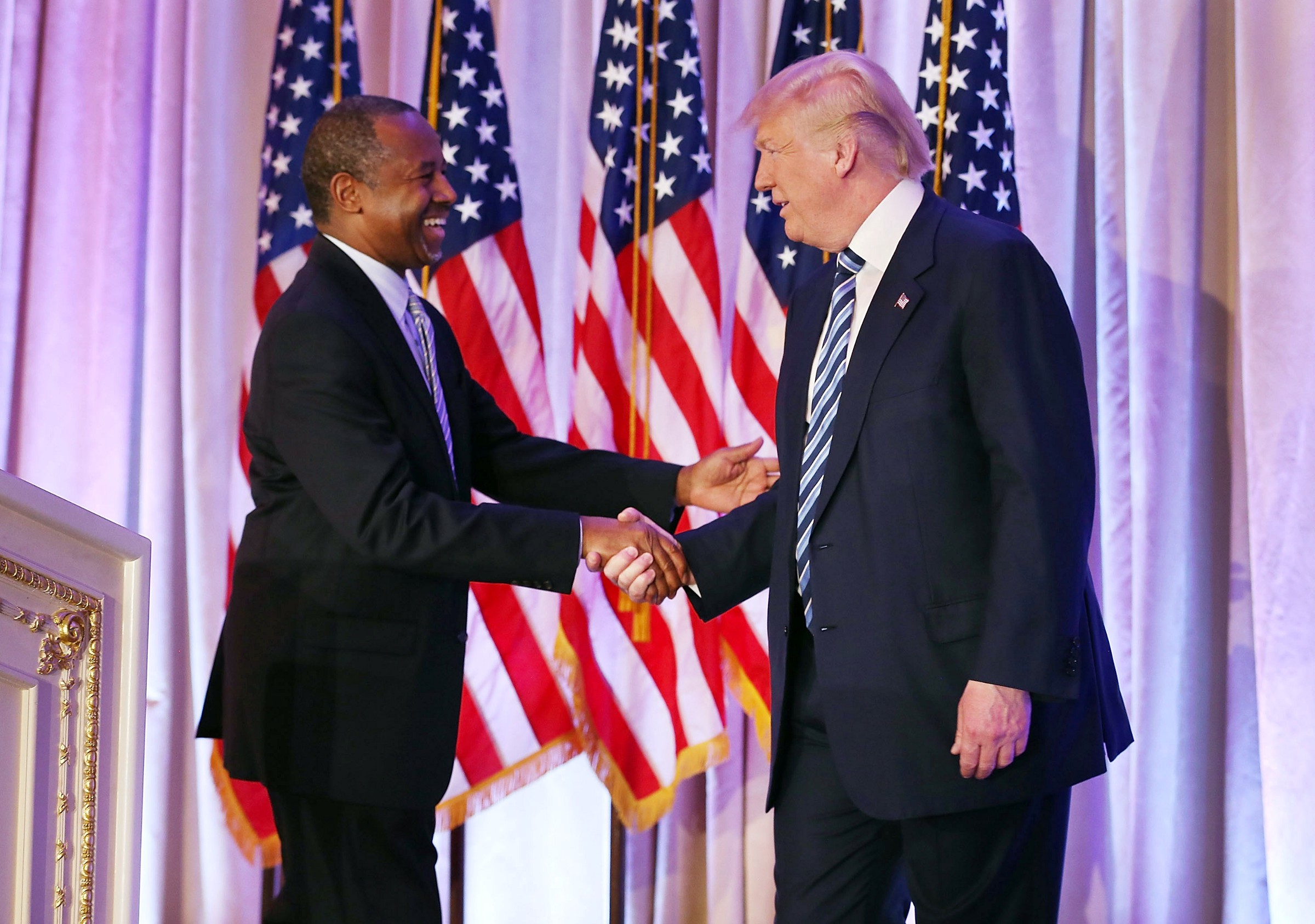

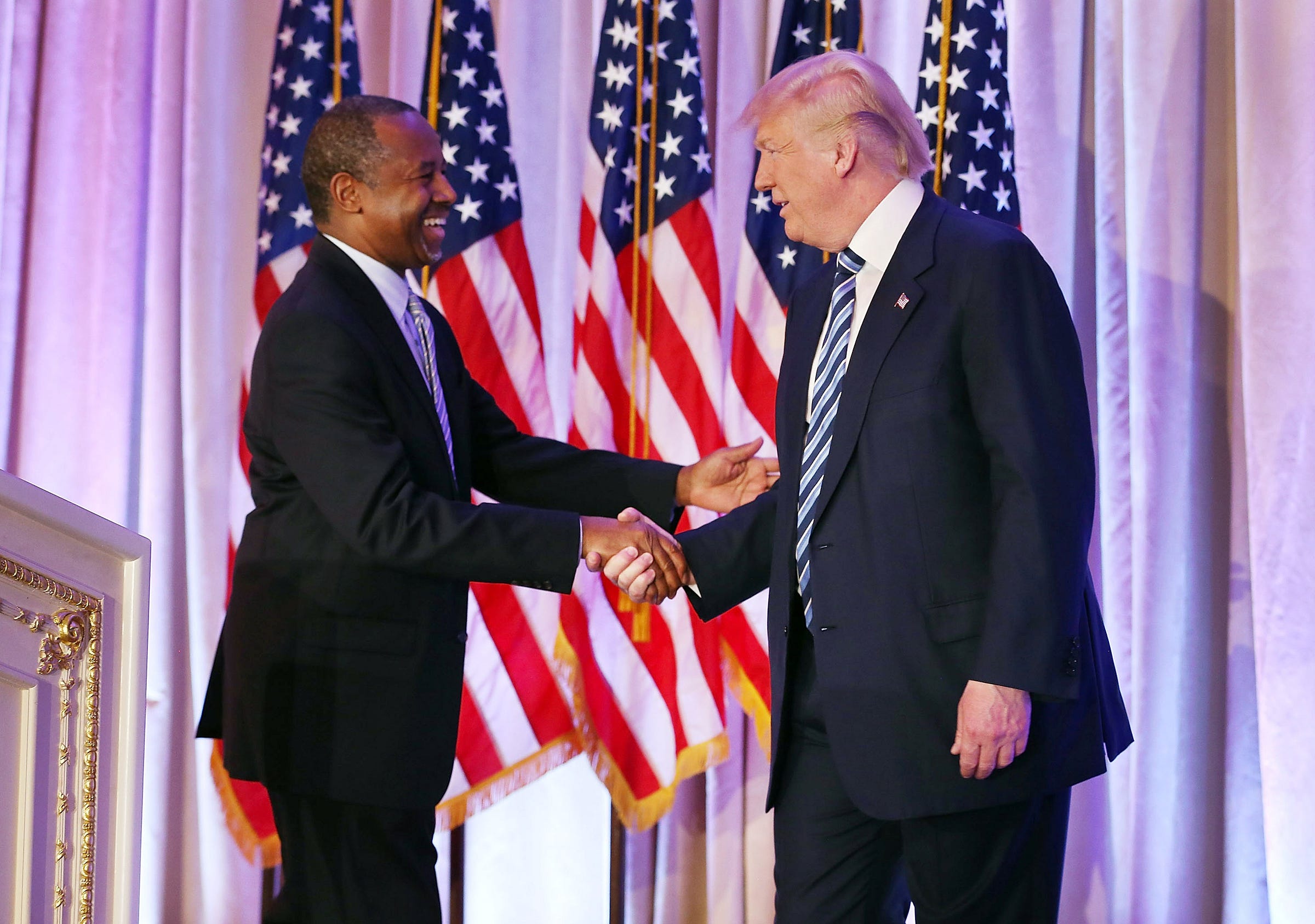

But last Thursday, the Ben Carson–helmed Department of Housing and Urban Development (HUD) filed suit against Facebook, alleging housing discrimination. As HUD Secretary Carson said in a statement, “Facebook is discriminating against people based upon who they are and where they live.” He noted that the ad targeting amounted to a more advanced and surreptitious version of redlining — the practice of denying people access to vital services chiefly because of their race or ethnicity.

“Using a computer to limit a person’s housing choices can be just as discriminatory as slamming a door in someone’s face,” Carson said.

This lawsuit, and the timing, raise two key questions: When is it the responsibility of a platform to make sure a user can’t exploit its tool for nefarious or illegal purposes? And since when does the Trump administration care deeply about discriminatory practices and subversion of the rights of minorities?

Publisher vs. platform: The classic problem

The first question is complicated. Facebook has seen itself as a conduit to “connecting the world.” While the company facilitates the creation and spread of content, it is not itself a content creator. Simply put, Facebook’s party line is that it’s a platform, not a publisher or a media company. But where is that line drawn, and by whom? A key distinction can be found in the definitions of the words, and any good lawyer will tell you that words matter.

A platform, per the Merriam-Webster Dictionary, is “a place or opportunity for public discussion.” It’s a forum and a means to communicate and share ideas, but a platform doesn’t create discussion topics, censor them, or hold any responsibility for the veracity of the information shared. A publisher, however, is “a person or corporation who is engaged in publishing,” benefitting from the “commercial production and issuance of literature, information, musical scores or sometimes recordings, or art.”

Comparing the two definitions, you can easily see why Facebook would rather be thought of as a platform. If Facebook is seen as only the tool used to communicate, it is no different than any other communication tool. In this world, Facebook’s ad tool is something like a pen. No one sues Bic for the contents of a letter its users write, nor is the company subject to scrutiny and regulation from the Federal Trade Commission and the Federal Communications Commission. Section 230 of the 1996 Communications Decency Act specifically holds that platforms cannot be held liable for the content that users post on their services. If Facebook were considered a publisher, it would be responsible for fact-checking all the content distributed on its suite of apps, which it doesn’t fully control. Yes, Facebook has the ability to take down offensive content, but it doesn’t produce or issue it initially. It’s just a distribution channel.

If we classify Facebook as a platform, its main responsibilities and liabilities are twofold. First, it must create tools that cannot be manipulated to circumvent someone’s civil liberties or deny them equal access and opportunity to housing, employment, or financial services. Second, it must release only products that are thoroughly tested and shown not to have a significant negative impact on already marginalized groups. For example, it should not support voter suppression or curate job opportunities for a select and privileged few instead of all. Facebook’s mistakes generally seem to be the result of emphasizing speed and not identifying or thinking through consequences for users in general, and minorities in particular. It must ensure that all voices are at the table when creating and testing products.

Why does the Trump administration care?

As for the Trump administration’s sudden defense of civil rights of marginalized groups, a key issue for Facebook in its dispute with HUD is the agency’s insistence on unfettered access to user data. In a statement following news of the lawsuit, Facebook said, “While we were eager to find a solution, HUD insisted on access to sensitive information — like user data — without adequate safeguards. We’re disappointed by today’s developments, but we’ll continue working with civil rights experts on these issues.”

Facebook’s hesitation isn’t unfounded. Considering this administration has run roughshod over the civil liberties of underrepresented groups (from Muslim bans to separation of children at the border to banning transgender individuals from military service to defunding key educational initiatives), it is easy to look askance at its supposed effort to enforce civil liberties rather than undermine them. A quick survey of recent DOJ and administration prosecutions reveals a theme: targeting perceived liberal rivals from Silicon Valley and Hollywood with the college admissions scam; charging Michael Avenatti, a vocal Trump opponent, with wire fraud and extortion; and now pursuing Facebook, which previously withstood accusations of anti-conservative bias. Prosecutorial discretion can be a powerful political tool, particularly in the hands of those who show a liking for intimidating their adversaries.

While housing discrimination is a true problem, particularly as gentrification and the affordable housing crisis continue, it is curious that HUD decided to go after Facebook only after the company wouldn’t allow HUD access to user data without proper safeguards, especially after accusations of conservative bias against the platform. It is fair to ask what data was requested, how HUD sought to use it, and to what end.

Facebook may be liable for restitution, but it is also heartening to know the company would rather fight than give unrestricted access to user data to an administration that isn’t big on transparency or playing by the rules. Facebook acknowledged that trade-off, and its resolve to stand firm, when it stated, “We’re disappointed by today’s developments, but we’ll continue working with civil rights experts on these issues,” demonstrating that the company’s ethical instincts are in the right place.

While the HUD lawsuit seems altruistic, it may be a Trojan horse that seeks to open the door to greater regulation that treats platforms as publishers. Of course, only time—and perhaps another election—will tell.

Bärí A. Williams is vice president of legal, policy, and business affairs at All Turtles. She previously served as head of business operations in North America for StubHub. She was also lead counsel for Facebook and created its Supplier Diversity program.